hello, 大家好, 今天给大家分享的这个本周发生在生产环境的一个真实的案例。

故障的现象是: 主库短时间内生成了大量的wal日志,触发了归档archive_command的操作,导致灾备中心的2台standby 机器同步中断。

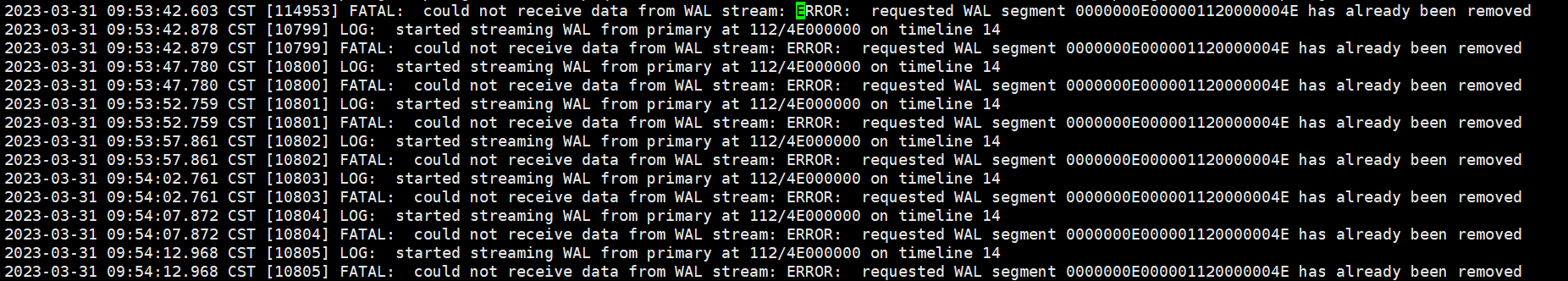

standby log:

fatal: could not receive data from wal stream: error: requested wal segment 0000000e000001120000004e has already been removed

同时也触发了主库磁盘写入异常的报警: 每秒达到了500mb

登录主库服务器,查看归档路径大小:大致产生了80gb 的wal日志 (粗略统计)

prod [postgres@whdrcsrv710 archive_wals]# du -h ./

82g ./

作为dba的第一放反应是,开发那边是不是偷偷的跑了什么大量数据处理的任务了: insert,update,delete 等操作。

我们可以用pg 原生自带的工具pg_waldump 分析一下wal 日志: 到底是是操作了那个数据库对象?

prod [postgres@wqdcsrv1318 archive_wals]# pg_waldump ./0000000e000001150000005a

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afd40c8, prev 115/5afd21e0, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950374 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afd5f98, prev 115/5afd40c8, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950375 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afd7e80, prev 115/5afd5f98, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950376 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afd9d68, prev 115/5afd7e80, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950377 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afdbc50, prev 115/5afd9d68, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950378 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afddb38, prev 115/5afdbc50, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950379 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afdfa20, prev 115/5afddb38, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950381 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afe1908, prev 115/5afdfa20, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950380 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afe37f0, prev 115/5afe1908, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950382 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afe56d8, prev 115/5afe37f0, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950383 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afe75c0, prev 115/5afe56d8, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950384 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afe94a8, prev 115/5afe75c0, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950385 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afeb390, prev 115/5afe94a8, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950386 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afed278, prev 115/5afeb390, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950388 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5afef160, prev 115/5afed278, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950387 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5aff1048, prev 115/5afef160, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950389 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5aff2f30, prev 115/5aff1048, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950390 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5aff4e18, prev 115/5aff2f30, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950391 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5aff6d00, prev 115/5aff4e18, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950392 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5aff8be8, prev 115/5aff6d00, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950393 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5affaad0, prev 115/5aff8be8, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950397 fpw

rmgr: xlog len (rec/tot): 49/ 7885, tx: 0, lsn: 115/5affc9b8, prev 115/5affaad0, desc: fpi_for_hint , blkref #0: rel 1663/8786419/8843923 blk 1950394 fpw

根据 relnode 的信息 1663/8786419/8843923: 我们可以定位到对象: app_tapp_notification.t_notification_send_record

db_tapp=# select * , pg_relation_filepath(oid) from pg_class where pg_relation_filepath(oid) like '�43923%';

oid | relname | relnamespace | reltype | reloftype | relowner | relam | relfilenode | reltablespace | relpages | reltuples | relallvisible | reltoastrelid | relhasindex | relisshared | relpersistence | relkind | relnatts | relchecks | relhasr

ules | relhastriggers | relhassubclass | relrowsecurity | relforcerowsecurity | relispopulated | relreplident | relispartition | relrewrite | relfrozenxid | relminmxid | relacl | reloptions | relpa

rtbound | pg_relation_filepath

--------- ---------------------------- -------------- --------- ----------- ---------- ------- ------------- --------------- ---------- --------------- --------------- --------------- ------------- ------------- ---------------- --------- ---------- ----------- --------

----- ---------------- ---------------- ---------------- --------------------- ---------------- -------------- ---------------- ------------ -------------- ------------ --------------------------------------------------------------------------------- ------------ ------

-------- ----------------------

8843923 | t_notification_send_record | 8843893 | 8843925 | 0 | 8786421 | 2 | 8843923 | 0 | 10540222 | 1.0176233e 08 | 0 | 8843934 | t | f | p | r | 23 | 0 | f

| f | f | f | f | t | d | f | 0 | 166107848 | 87151 | {tapprw=arwddxt/tapprw,tappro=r/tapprw,tappro_po=r/tapprw,tapp_dst_ro=r/tapprw} | |

| base/8786419/8843923

业务上来说,这个表是个超级大表,记录app推送消息的内容。

db_tapp=# select

db_tapp-# schemaname,

db_tapp-# relname,

db_tapp-# n_live_tup,

db_tapp-# n_dead_tup,

db_tapp-# to_char(coalesce(last_vacuum,null,'1900-01-01'),'yyyy-mm-dd hh24:mi:ss') as last_vacuum,

db_tapp-# to_char(coalesce(last_autovacuum,'1900-01-01'),'yyyy-mm-dd hh24:mi:ss') as last_autovacuum,

db_tapp-# to_char(coalesce(last_analyze,'1900-01-01'),'yyyy-mm-dd hh24:mi:ss') as last_analyze,

db_tapp-# to_char(coalesce(last_autoanalyze,'1900-01-01'),'yyyy-mm-dd hh24:mi:ss') as last_autoanalyze,

db_tapp-# vacuum_count,

db_tapp-# autovacuum_count,

db_tapp-# analyze_count,

db_tapp-# autoanalyze_count from pg_stat_user_tables where relname = 't_notification_send_record';

schemaname | relname | n_live_tup | n_dead_tup | last_vacuum | last_autovacuum | last_analyze | last_autoanalyze | vacuum_count | autovacuum_count | analyze_count | autoanalyze_count

----------------------- ---------------------------- ------------ ------------ --------------------- --------------------- --------------------- --------------------- -------------- ------------------ --------------- -------------------

app_tapp_notification | t_notification_send_record | 108667382 | 79759 | 1900-01-01 00:00:00 | 1900-01-01 00:00:00 | 1900-01-01 00:00:00 | 2023-01-11 10:09:42 | 0 | 0 | 0 | 5

(1 row)

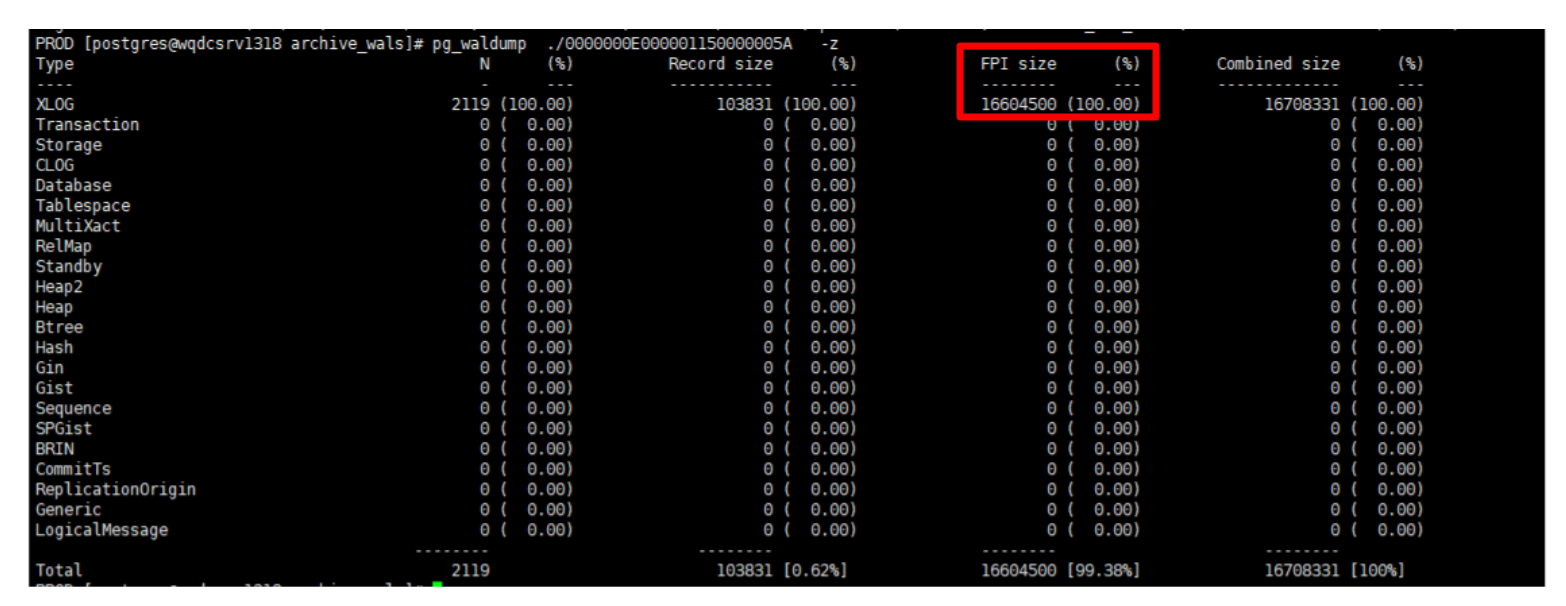

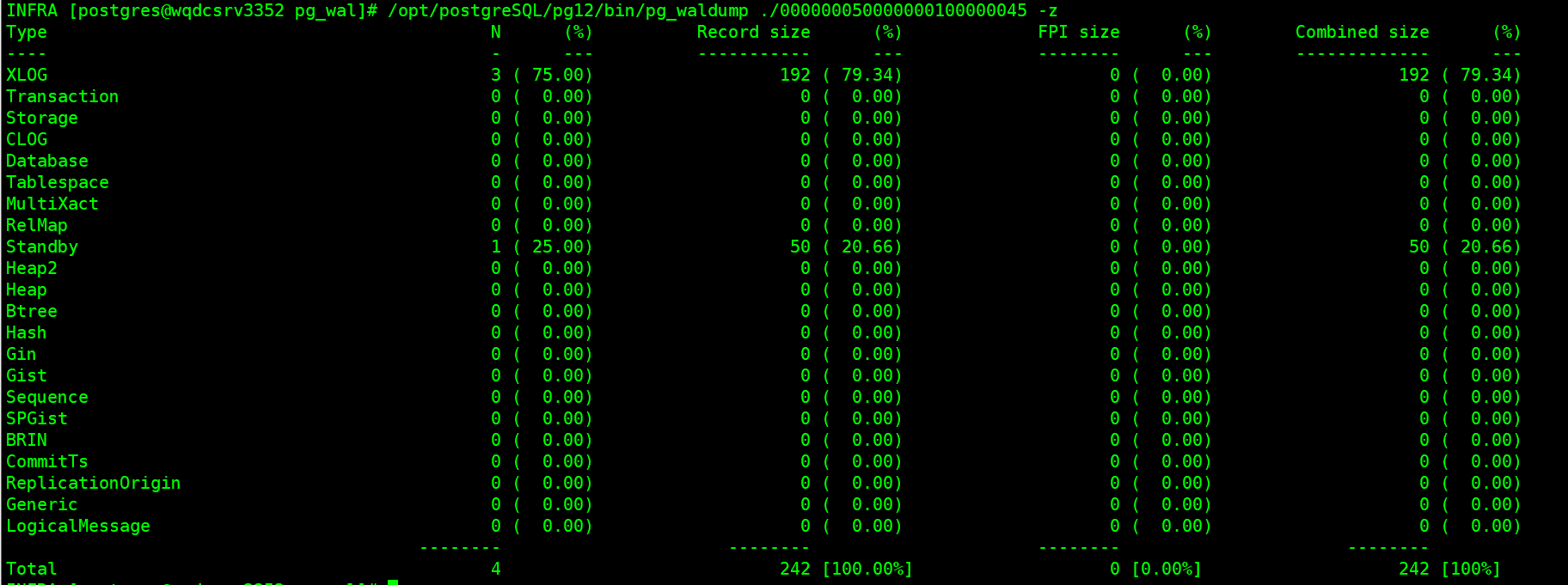

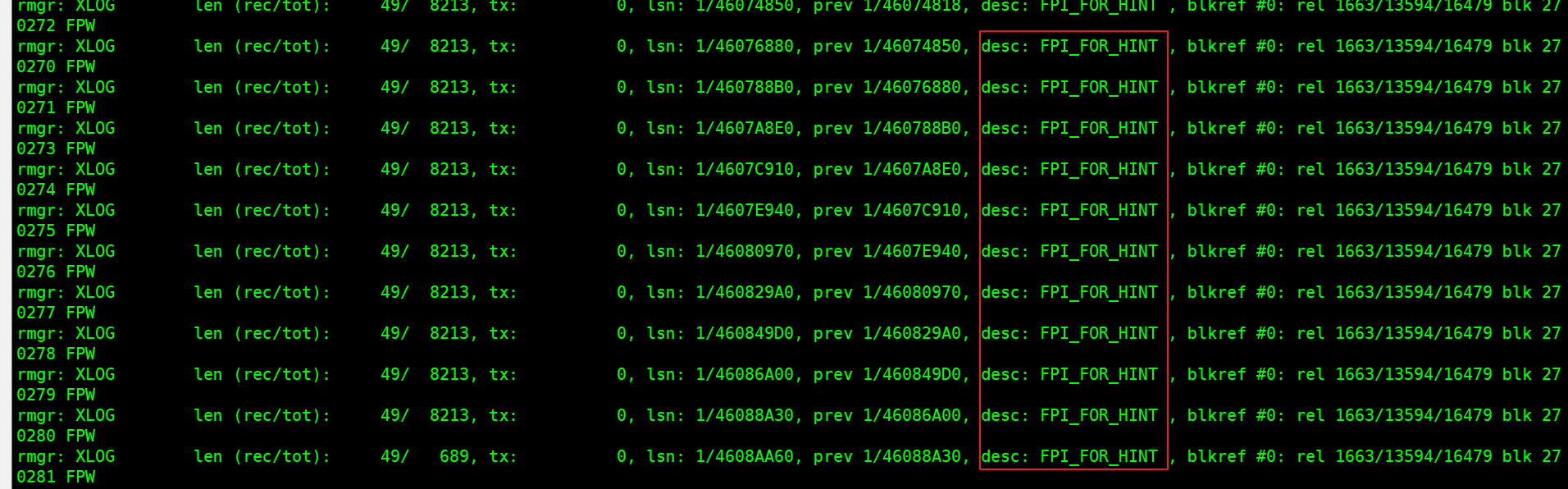

我们再来统计一下 wal 日志里面的类型汇总 : ./pg_waldump ./wal/00000001000001120000004e -z

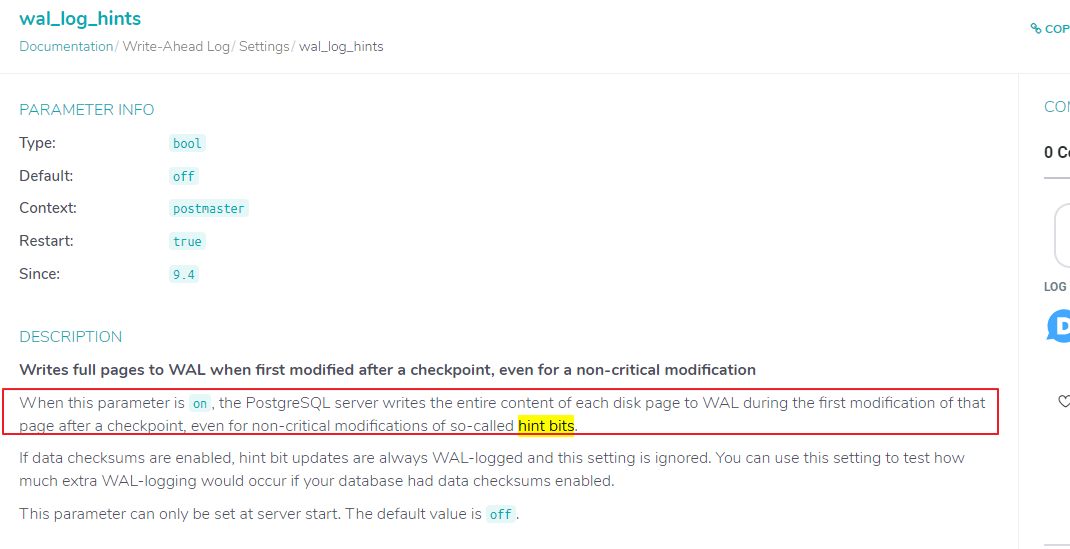

发现都是 full page writes (fpi ) , 写的都是: fpi_for_hint 是表里面每条记录 hint的标记。 这个与参数 wal_log_hints 参数有关,开启这个参数后会写wal里面写入额外日志信息。

我们去这个业务表上查询一下,是否有大量的插入和更新操作:

结果大大的出乎了我们的意料, 并没有存在大量的insert,update的操作。 只有600多条记录时今天insert或者update的记录。

db_tapp=# select count(1) from app_tapp_notification.t_notification_send_record where cdate > to_date('2023-03-31','yyyy-mm-dd');

count

-------

617

(1 row)

db_tapp=# select count(1) from app_tapp_notification.t_notification_send_record where edate > to_date('2023-03-31','yyyy-mm-dd');

count

-------

623

(1 row)

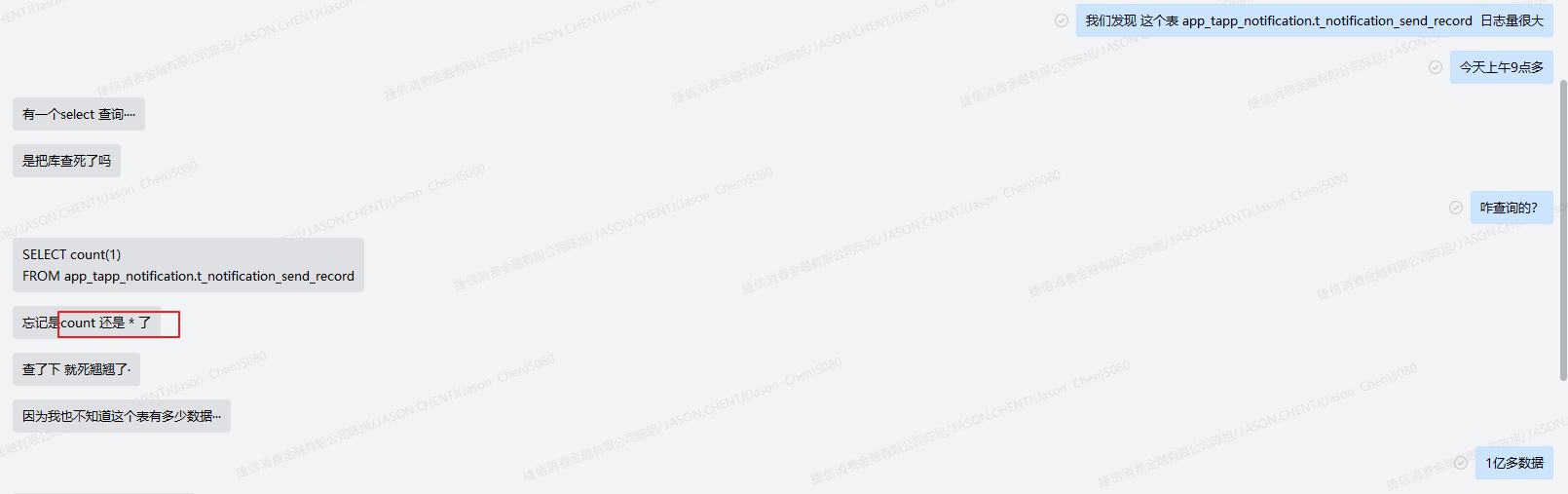

于是找到开发,咨询了一下上午是不是有什么业务发生了:

得到了结论开发执行了 select count(*) from app_tapp_notification.t_notification_send_record.

app_tapp_notification.t_notification_send_record 这个表的数据量大致是 1亿多:

那么问题来了,主库查询大表 select count(*) 是否会产生大量的wal日志呢?

oracle,mysql dba的思维是 不可能的…

难道pg有这个问题? pg和oracle,mysql的最大区别就是 mvcc的机制。。。潜意识中似乎问题在这个大方向上。。。。

继续google 一下: 看看有没有类似的文章.

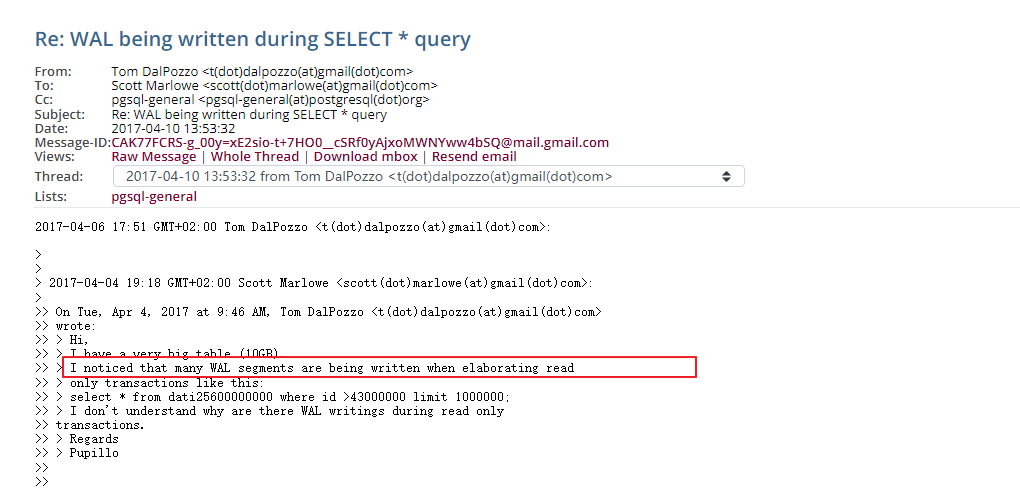

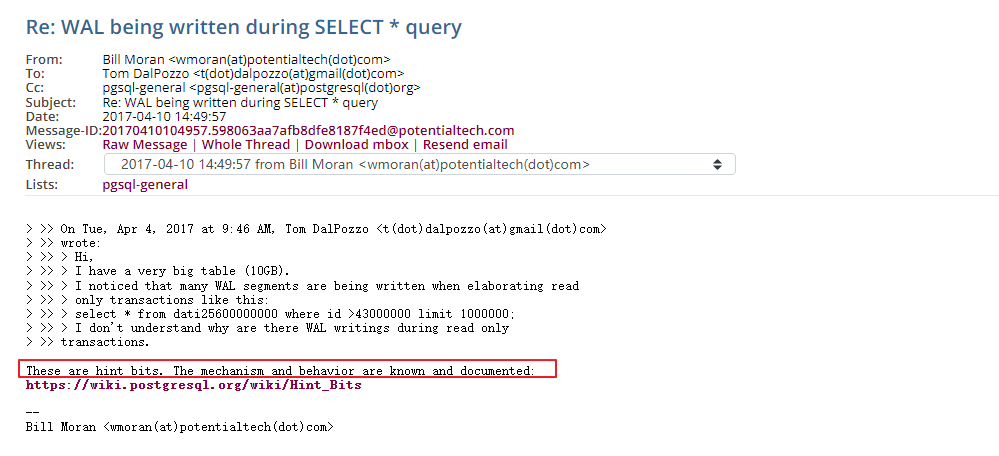

果然有人遇到了类似的情况:

https://www.postgresql.org/message-id/cak77fcrs-g_00y=xe2sio-t+7ho0__csrf0yajxomwnyww4bsq@mail.gmail.com

而且这个问题还有一个专业术语叫 hint bits:

我们查看一下 wiki: https://wiki.postgresql.org/wiki/hint_bits

select count(* ) 果然会更新 hint bits :will update its hint bits to match the commit/abort status of the inserting/deleting transaction(s) as of the instant of the examination. a plain select, count(*), or vacuum on the entire table will check every tuple for visibility and set its hint bits.

这个hint bits 是由于我们开启了参数 wal_log_hints = on , 从而在每条元祖上记录了额外的记录

src/include/access/htup_details.h

#define heap_xmin_committed 0x0100 /* t_xmin committed 256 */

#define heap_xmin_invalid 0x0200 /* t_xmin invalid/aborted 512 */

#define heap_xmax_committed 0x0400 /* t_xmax committed 1024 */

#define heap_xmax_invalid 0x0800 /* t_xmax invalid/aborted 2048 */

最后我们做一个实验在测试环境复现一下这个问题:

我们先关闭参数 wal_log_hints:

postgres@[local:/tmp]:2004=#34126 show wal_log_hints ;

wal_log_hints

---------------

on

(1 row)

postgres@[local:/tmp]:2004=#34126 select context from pg_settings where name = 'wal_log_hints';

context

------------

postmaster

(1 row)

postgres@[local:/tmp]:2004=#34126 alter system set wal_log_hints='off';

alter system

postgres@[local:/tmp]:2004=#34126 exit;

重启数据库生效:

/opt/postgresql/pg12/bin/pg_ctl -d /data/postgresql/2004/data restart

我们来创建一张大表:目标5000万的数据

postgres@[local:/tmp]:2004=#40524 create table tab_log_hint_off(id int, name varchar(200));

create table

postgres@[local:/tmp]:2004=#40524 insert into tab_log_hint_off select generate_series(1,50000000),'jason';

insert 0 50000000

我们记录一下当前的wal log的日志量列表: 当前的日志是 000000050000000100000045

-rw------- 1 postgres postgres 16m mar 31 16:26 000000050000000100000044

drwx------ 2 postgres postgres 8.0k mar 31 16:26 archive_status

-rw------- 1 postgres postgres 16m mar 31 16:26 000000050000000100000045

我们执行一下 select count(1):

postgres@[local:/tmp]:2004=#54829 show wal_log_hints;

wal_log_hints

---------------

off

(1 row)

postgres@[local:/tmp]:2004=#53719 select count(*) from tab_log_hint_off;

count

----------

50000000

(1 row)

postgres@[local:/tmp]:2004=#53719 exit;

infra [postgres@wqdcsrv3352 pg_wal]# ls -lhtr

-rw------- 1 postgres postgres 16m mar 31 16:26 000000050000000100000044

drwx------ 2 postgres postgres 8.0k mar 31 16:26 archive_status

-rw------- 1 postgres postgres 16m mar 31 16:26 000000050000000100000045

我们来观察一下 000000050000000100000045 这个日志: 并没有出现 fpi_for_hint

我们修改参数 wal_log_hints=on, 重启数据库:

postgres@[local:/tmp]:2004=#54829 alter system set wal_log_hints=on;

alter system

infra [postgres@wqdcsrv3352 archive_wals]# /opt/postgresql/pg12/bin/pg_ctl -d /data/postgresql/2004/data restart

重启数据库会触发checkpoint 操作, 自动切换到下一个wal: 生成新的000000050000000100000046

-rw------- 1 postgres postgres 16m mar 31 16:28 000000050000000100000045

drwx------ 2 postgres postgres 8.0k mar 31 16:28 archive_status

-rw------- 1 postgres postgres 16m mar 31 16:28 000000050000000100000046

再次执行一下 select count(1) ,

postgres@[local:/tmp]:2004=#65250 show wal_log_hints;

wal_log_hints

---------------

on

(1 row)

postgres@[local:/tmp]:2004=#65250 select count(*) from tab_log_hint_off;

count

----------

50000000

(1 row)

观察 wal日志时候: 发现文件的最后修改时间变化了 证明有wal log 记录写入

-rw------- 1 postgres postgres 16m mar 31 16:47 000000050000000100000046

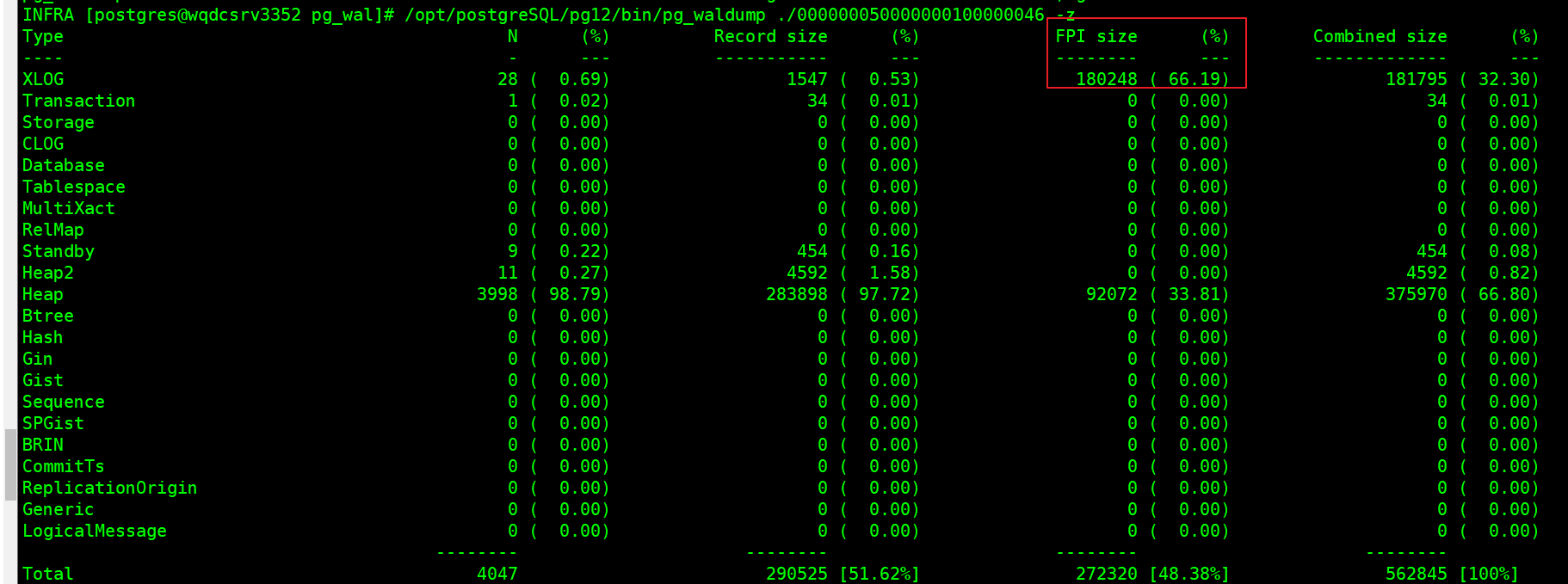

我们来分析一下 000000050000000100000046 的内容: 出现了大量的fpi_for_hint 是符合我们的预期的

最后总结一下:

1)开启参数wal_log_hints = on,会触发写 hint bits, 从而产生额外的wal日志。

这个参数wal_log_hints 开启的目的一般就是使用pg_rewind 重建standby数据库。 如果可以接受stanby 全部重建,可以不用pg_rewind,也就不需要开启这个参数wal_log_hints。 (默认值是off)

2)如果确实是需要pg_rewind这个操作,只能适当调大 wal相关的参数:

max_wal_size wal_keep_segments * wal_segment_size (p12以及之前的版本)

max_wal_size wal_keep_size (p13以及之后的版本)

或者 适当调大checkpoint 的时间,让full page writes 写的频率稍微慢一些, 起到一定的缓解作用。

3)开启压缩wal模式: wal_compression = on , 这个参数会压缩wal日志,从而会节省了wal的空间和网络传输带宽的流量,带来的副作用是cpu会有额外的消耗,

建议在开启这个参数之前,做一下性能测试的监控,确认开启后是否可以满足生产的要求

4)在从库上进行 select count(*), select * from 全表,大表等操作。最好是有一个独立的 standby database 作为独立查询节点。

have a fun 😃 !